Best AI Comparison 2025: Complete Guide to Top Models, Benchmarks & Rankings

The artificial intelligence landscape in 2025 has transformed into an intensely competitive ecosystem where choosing the right AI model can mean the difference between exceptional productivity and wasted resources. With more than 50 advanced language models now publicly available, each with distinct strengths, pricing structures, and specialized capabilities, making an informed decision requires understanding not just performance metrics, but real-world applications, cost implications, and architectural differences. This comprehensive guide examines the best AI models currently available, compares them across critical dimensions, and provides actionable recommendations tailored to your specific use case.

Understanding AI Model Comparison in 2025

The evolution of artificial intelligence has accelerated dramatically since 2024, introducing unprecedented capabilities and complexity in model evaluation. Unlike previous years when comparing AI models meant examining a handful of options, 2025 presents a market where intelligent model selection requires understanding benchmarking methodologies, performance metrics, pricing structures, and practical real-world capabilities.

Modern AI comparison encompasses more than raw intelligence scores. Today’s decision-makers must evaluate latency (response speed), throughput (tokens generated per second), context windows (how much information a model can process at once), cost-efficiency, multimodal capabilities (handling text, images, video, and audio), and specialized domain expertise across coding, creative writing, research, and reasoning tasks.

Top AI Models: Head-to-Head Comparison

1. OpenAI’s GPT Series: The Market Leader

Latest Model: GPT-5 and GPT-5 Mini (as of November 2025)

OpenAI maintains its commanding position in the AI landscape with GPT-5, delivering exceptional versatility across content creation, coding, reasoning, and multimodal tasks. The model represents the industry benchmark for general-purpose artificial intelligence, with 46.6 billion monthly visits demonstrating unmatched market penetration and user trust.

Key Capabilities:

- Exceptional performance in creative writing and content generation

- Strong coding assistance across Python, JavaScript, and specialized frameworks

- Advanced reasoning for complex problem-solving tasks

- Seamless multimodal processing with images and text

- Reliable instruction-following and nuanced language understanding

Benchmarking Performance: GPT-5 achieves approximately 90% pass rate on complex coding tasks and demonstrates superior performance in creative content generation. The model excels in collaborative workflows where human-AI interaction requires consistent quality and reliability.

Pricing Structure:

- GPT-5: $1.25 per million input tokens, $10.00 per million output tokens

- GPT-5 Mini: $0.25 per million input tokens, $2.00 per million output tokens

- ChatGPT Plus: $20 per month, subject to usage limits

Ideal For: Content creators, software developers, research professionals, and business users requiring reliable, general-purpose artificial intelligence with industry-standard performance.

2. Anthropic’s Claude: The Reasoning Champion

Latest Model: Claude Opus 4 and Sonnet 4 (as of November 2025)

Anthropic has positioned Claude as the premium choice for professionals requiring nuanced reasoning, ethical considerations, and high-quality outputs with minimal hallucinations. Benchmark testing shows Claude achieving an 89.3% average score across multiple real-world coding and creative tasks, often matching or exceeding competing models.

Distinguished Features:

- Exceptionally low hallucination rates and conservative accuracy

- Sophisticated understanding of nuance, context, and subtlety

- Superior performance in academic writing, technical documentation, and editorial tasks

- Advanced ethical reasoning with built-in safety considerations

- 200,000-token context window supporting extensive document analysis

Real-World Coding Performance: Claude Sonnet 4 ranked as the top performer in JavaScript maze-solving tasks (93/100) and FastAPI authentication implementations, demonstrating architectural clarity and security-conscious code generation.

Pricing Model:

- Claude Opus 4: $15.00 per million input tokens, $75.00 per million output tokens

- Claude Sonnet 4: $3.00 per million input tokens, $15.00 per million output tokens

- Claude Haiku 3.5: $0.80 per million input tokens, $4.00 per million output tokens

Best For: Software engineers, academic researchers, content specialists, and organizations prioritizing accuracy, safety, and ethical considerations over raw speed.

3. Google Gemini: The Multimodal Powerhouse

Latest Version: Gemini 2.5 Pro and Gemini 2.5 Flash (November 2025)

Google’s Gemini series represents a technological leap in multimodal AI capabilities, combining text, image, video, and audio processing within a unified architecture. With a 2 million token context window, Gemini enables processing of entire books, research papers, and video content in single interactions.

Standout Capabilities:

- Native multimodal processing: text, images, video, and audio in one prompt

- Exceptional long-context understanding for document analysis

- Real-time web search integration within responses

- Superior visual reasoning and image interpretation

- Cost-effective pricing for high-volume applications

Performance Highlights: Gemini 2.5 achieved first-place ranking in summarization tasks (89.1% accuracy) and tied for best technical assistance benchmarking (Elo score of 1420), demonstrating consistent quality across diverse applications.

Pricing Breakdown:

- Gemini 2.5 Pro: $1.25-$2.50 per million input tokens (tiered), $10-$15 per million output

- Gemini 2.5 Flash: $0.15 per million input tokens, $0.60 per million output

- Free tier: Limited queries with standard Gemini model

Perfect For: Professionals requiring long-document analysis, video understanding, multimodal content creation, and cost-conscious teams operating at scale.

4. Meta’s Llama: Open-Source Accessibility

Current Release: Llama 3.2 (Multiple sizes: 1B, 3B, 11B, and 90B parameters)

Meta’s Llama series democratizes access to advanced AI by providing open-source models that can run locally on consumer hardware. While not matching closed-source models in raw performance, Llama offers unmatched customization, privacy advantages, and cost elimination for organizations with technical infrastructure.

Open-Source Advantages:

- Complete model transparency and auditability

- Local deployment eliminating cloud dependencies

- Full customization and fine-tuning capabilities

- Zero licensing costs and usage fees

- Privacy-preserving deployment options

Technical Specifications: Llama 3.2 ranges from 1 billion to 90 billion parameters, allowing deployment across devices from smartphones to enterprise servers. The lightweight 1B and 3B models are text-only, while the 11B and 90B vision variants handle images and text together.

Best Applications: Research institutions, privacy-sensitive enterprises, AI developers requiring fine-tuning, and organizations with in-house technical teams seeking complete autonomy.

5. xAI’s Grok: Real-Time Intelligence

Latest Release: Grok-3 (Standard, Fast, and Mini variants)

Grok emerges as a specialized AI model emphasizing real-time information retrieval, creative writing excellence, and advanced reasoning capabilities. Integrated within the X (formerly Twitter) ecosystem, Grok provides unique real-time data access unavailable to competing models.

Distinguishing Features:

- Advanced creative writing and humor generation

- Real-time access to current events and trending information

- Specialized reasoning modes: “Think” and “Big Brain” for complex problems

- Strong coding performance across multiple languages

- Integration with X platform for social media-optimized content

Pricing Structure:

- Grok-3 Standard: $3.00 per million input tokens, $15.00 per million output

- Grok-3 Fast: $5.00 per million input tokens, $25.00 per million output

- Grok-3 Mini: $0.30 per million input tokens, $0.50 per million output

Ideal For: Content creators, journalists, social media managers, and professionals requiring current information integration within AI-generated responses.

6. DeepSeek: The Efficiency Leader

Latest Models: DeepSeek R1 and V3 (Open-source available)

DeepSeek represents a breakthrough in cost-efficiency and open-source accessibility, delivering performance approaching commercial models at 99% lower cost compared to premium alternatives. The model achieves this through innovative architecture optimizations and efficient parameter allocation.

Performance Advantages:

- Budget-friendly pricing enabling mass deployment

- Exceptional reasoning for mathematical and coding tasks

- Fully open-source models with local deployment options

- Rapid inference speed supporting real-time applications

- Emerging strong competitor in reasoning benchmarks

Benchmark Results: DeepSeek achieved an 88.0% average score across comprehensive coding tests, matching or exceeding expensive alternatives at a small fraction of the operational cost.

Pricing Comparison:

- DeepSeek R1/V3: $0.028 per million input tokens, $0.042 per million output tokens

- Open-source versions: Free to download and self-host

Recommended For: Cost-conscious businesses, research teams, developers building AI-powered startups, and organizations optimizing for long-term expenditure reduction.

Comprehensive AI Model Comparison Table

| Model | Input Cost ($/M tokens) | Output Cost ($/M tokens) | Context Window | Best For | Rating |
|---|---|---|---|---|---|
| GPT-5 (OpenAI) | $1.25 | $10.00 | 128K tokens | General-purpose, creativity | 9.2/10 |
| Claude Opus 4 | $15.00 | $75.00 | 200K tokens | Reasoning, accuracy | 9.1/10 |
| Gemini 2.5 Pro | $1.25 | $10.00 | 2M tokens | Multimodal, video | 8.9/10 |
| Gemini 2.5 Flash | $0.15 | $0.60 | 2M tokens | Speed, cost efficiency | 8.7/10 |
| Grok-3 Standard | $3.00 | $15.00 | 128K tokens | Real-time, creative | 8.3/10 |
| DeepSeek R1 | $0.028 | $0.042 | 128K tokens | Budget operations | 8.2/10 |
| Llama 3.2 (Open) | $0.00 | $0.00 | 128K tokens | Local deployment | 7.9/10 |

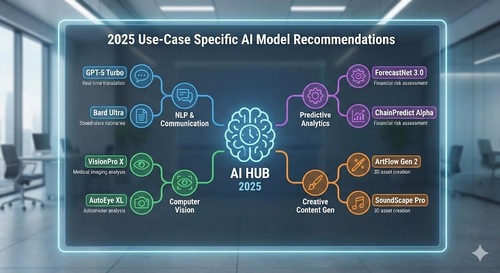

AI Comparison by Use Case: Expert Recommendations

Best AI for Software Development & Coding

Top Picks: Claude Opus 4 and GPT-5 tie for the lead in coding tasks, with complementary strengths.

Claude Opus 4 scored 95/100 in JavaScript implementation quality and test handling, producing code that prioritizes elegance, readability, and security. The model excels in architectural decisions and best-practice implementation. GPT-5 demonstrates equally strong performance with slightly faster inference speeds, making it preferable for real-time development workflows where speed matters.

For Budget-Conscious Teams: Gemini 2.5 Flash delivers 87-88% of Claude’s coding quality at 5% of the cost, presenting an excellent value proposition for teams balancing performance against budget constraints.

Best AI for Content Creation & Academic Writing

Recommended Choice: Claude Sonnet 4 for sophisticated, nuanced writing; GPT-5 for creative flexibility.

Claude’s sophisticated language understanding produces exceptionally polished academic writing, editorial content, and professional communications. The model demonstrates superior contextual awareness and writing nuance. GPT-5 excels in creative storytelling, marketing copy, and diverse content styles requiring varied tones and perspectives.

Alternative Option: Perplexity AI for research-backed writing that integrates current sources and citations, reducing hallucinations about recent events.

Best AI for Research & Data Analysis

Top Recommendation: Gemini 2.5 Pro with its 2 million token context window.

The massive context window enables uploading entire research papers, datasets, and documents for comprehensive analysis within a single interaction. Claude’s 200K token window provides secondary advantage for focused research tasks. For researchers requiring real-time information, Perplexity AI integrates web search to eliminate information gaps.

Best AI for Multimodal Tasks: Image, Video, Audio

Clear Leader: Gemini 2.5 Pro and Gemini 2.5 Flash excel in native multimodal processing.

These models simultaneously process text, images, video footage, and audio within single prompts, supporting document analysis with embedded images, video transcription, and audio-to-text conversion. GPT-4o Vision provides secondary multimodal capability with higher cost.

Best AI for Real-Time Information & Current Events

Specialist Choice: Grok-3 and Perplexity AI, both integrating real-time web search.

These models reduce hallucinations about current events by retrieving live information during query processing. Models such as Claude and GPT-5, when used without a search tool, rely on training data and cannot access information published after their training cutoff.

Best AI for Budget Operations & Cost Optimization

Unbeatable Value: DeepSeek R1/V3 and open-source Llama 3.2.

DeepSeek achieves comparable performance to premium models at 99% lower cost. For maximum cost elimination, self-hosted Llama 3.2 requires only hardware investment with zero per-token fees. Organizations processing millions of queries should seriously evaluate these alternatives.

Understanding AI Benchmarks & Performance Metrics

What Are LLM Leaderboards?

LLM (Large Language Model) leaderboards provide standardized evaluation platforms comparing AI models across consistent criteria. Rather than subjective assessments, leaderboards employ rigorous benchmarking methodologies measuring accuracy, speed, safety, and specialized domain performance.

Leading Leaderboards in 2025:

- Open LLM Leaderboard: Evaluates open-source models using MMLU (Massive Multitask Language Understanding) and other comprehensive benchmarks

- LMSYS Chatbot Arena: Collects blind, side-by-side human votes on model outputs for creative and conversational tasks and ranks models with Elo-style ratings

- Artificial Analysis LLM Leaderboard: Compares 30+ models across intelligence, price, latency, and context window dimensions

- Vellum AI Leaderboard: Focuses on enterprise-relevant benchmarks including production performance and reliability
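Arena-style leaderboards turn pairwise votes into a rating. The following is a minimal sketch of the classic Elo update for a single comparison. The K-factor of 32 and the starting ratings are illustrative assumptions, and real leaderboards may fit ratings with a different statistical model than this simple online update.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return new (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A won the vote, 0.0 if B won, and 0.5 for a tie.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two hypothetical models start level; model A wins one vote.
new_a, new_b = elo_update(1400.0, 1400.0, score_a=1.0)
print(new_a, new_b)  # 1416.0 1384.0
```

Because the update is zero-sum, a small rating gap between two models implies a win probability only slightly above 50%, which is worth remembering when reading leaderboard rankings.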

Critical Performance Metrics Explained

Accuracy: Percentage of correct answers on standardized tests. Benchmark tests like MMLU (with 14,000+ questions spanning 57 academic disciplines) measure multitask language understanding. Higher scores indicate superior knowledge breadth and reasoning capability.

Latency: Time elapsed from query submission to first token generation. Measured in milliseconds, latency affects real-time applications like chatbots and customer service systems. Lower latency (under 500ms) provides superior user experience.

Throughput: Number of tokens (roughly word fragments) the model generates per second. Higher throughput enables handling more concurrent users without performance degradation. As a rule of thumb, interactive enterprise applications target 50+ tokens per second.
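Latency and throughput can both be measured from a streaming response. The sketch below assumes the response arrives as an iterable of tokens; `fake_stream` is a hypothetical stand-in for a provider's streaming client, and throughput is computed here over the whole request, including the wait for the first token.

```python
import time
from typing import Iterable, Iterator


def fake_stream(tokens: list[str], first_delay: float, gap: float) -> Iterator[str]:
    """Hypothetical stand-in for a provider's streaming response."""
    time.sleep(first_delay)
    for tok in tokens:
        yield tok
        time.sleep(gap)


def measure_stream(stream: Iterable[str]) -> dict:
    """Return time-to-first-token (ms) and tokens per second for a stream."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in stream:
        if first_token_at is None:
            first_token_at = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    return {
        "ttft_ms": (first_token_at - start) * 1000.0,
        "tokens_per_s": count / (end - start),
        "tokens": count,
    }


stats = measure_stream(fake_stream(["Hello", ",", " world"], first_delay=0.05, gap=0.01))
print(stats)
```

In practice, averaging over many requests at different times of day gives a more reliable picture than a single measurement.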

Context Window: Maximum number of tokens the model can process at once. Larger context windows (measured in thousands of tokens) support analyzing longer documents, maintaining conversation history, and handling complex multi-part queries. 2025’s frontier models reach 2 million tokens, enabling book-length document processing.
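A quick way to check whether a document is likely to fit a given window is to estimate its token count from its length. This sketch assumes the rough rule of thumb of about four characters per token for English text; it is not a real tokenizer, and the `reserved_output` default is an illustrative assumption for leaving room for the reply.

```python
import math

CHARS_PER_TOKEN = 4  # rough English-text heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(text: str, context_window: int, reserved_output: int = 4_000) -> bool:
    """True if the prompt plus room for the reply fits in the window."""
    return estimate_tokens(text) + reserved_output <= context_window


document = "word " * 200_000            # 1,000,000 characters
print(estimate_tokens(document))         # 250000
print(fits_context(document, 200_000))   # False
print(fits_context(document, 2_000_000)) # True
```

For billing or hard limits, the provider's own tokenizer should be used instead, since token counts vary by model and language.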

Reasoning Capability: Performance on problems requiring multi-step logic, mathematical computation, and abstract thinking. Measured through specialized benchmarks like GPQA (graduate-level questions) and SWE-Bench (software engineering tasks), reasoning benchmarks show the highest variance between models.

AI Model Features: Side-by-Side Comparison

| Feature | GPT-5 | Claude 4 | Gemini 2.5 Pro | DeepSeek |
|---|---|---|---|---|
| Multimodal (Text, Images, Video) | Text + Images | Text + Images | ✓ Full Multimodal | Text only |
| Web Search Integration | Requires plugin | No | ✓ Native | No |
| Custom Instructions | ✓ | ✓ | ✓ | Limited |
| Reasoning Mode | ✓ o1/o3 | ✓ Extended Thinking | ✓ Thinking Mode | ✓ |
| Fine-Tuning Available | ✓ | ✓ | Limited | ✓ |
| API Access | ✓ | ✓ | ✓ | ✓ |
| Open Source Version | No | No | No | ✓ |

Open-Source vs. Proprietary AI Models: Strategic Comparison

Open-Source AI Advantages

Open-source models like Llama 3.2, Mistral, and DeepSeek provide complete transparency, full customization capabilities, and zero usage fees. Organizations gain complete model ownership, enabling deployment across distributed infrastructure without cloud vendor lock-in. Academic institutions and privacy-sensitive organizations particularly benefit from auditability and local deployment options.

Cost Reality: While eliminating per-token fees, open-source models require infrastructure investment. Organizations must provision GPUs or TPUs (specialized processors), hire infrastructure engineers, and manage technical complexity. True cost calculation includes hardware depreciation, electricity, and personnel expenses.

Proprietary AI Benefits

Closed-source commercial models (GPT-5, Claude, Gemini) eliminate infrastructure requirements, provide 24/7 enterprise support, guarantee uptime SLAs, and continuously improve through provider updates. Users access cutting-edge capabilities without hardware investment or technical burden.

Trade-off: Proprietary models involve ongoing subscription costs, data privacy considerations (providers may log queries for improvement purposes), and dependency on vendor stability. Users accept vendor-imposed limitations on customization and access to model internals.

Hybrid Approach: The 2025 Trend

Progressive organizations employ hybrid strategies: using open-source Llama 3.2 for confidential internal processing while leveraging GPT-5 API for specialized tasks requiring maximum capability. This balanced approach optimizes cost while maintaining flexibility.
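A hybrid setup needs some rule for deciding which requests stay on local infrastructure. The sketch below is one simple way to express that rule; the patterns and the backend names `local` and `api` are hypothetical, and a real policy would cover far more than these three checks.

```python
import re

# Hypothetical markers of confidential content; a real policy would be broader.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),            # US SSN-like number
    re.compile(r"\bconfidential\b", re.IGNORECASE),  # explicit marking
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),         # email address
]


def choose_backend(prompt: str) -> str:
    """Return 'local' for prompts that look confidential, else 'api'."""
    if any(pattern.search(prompt) for pattern in SENSITIVE_PATTERNS):
        return "local"
    return "api"


print(choose_backend("Summarize this CONFIDENTIAL memo"))  # local
print(choose_backend("Write a haiku about autumn"))        # api
```

Defaulting to the local model whenever a check is uncertain keeps the failure mode on the side of privacy rather than capability.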

Pricing Analysis: Making Cost-Effective Decisions

Understanding Token-Based Pricing

All major AI providers employ token-based billing: queries are measured in tokens (roughly 4 characters or 0.75 words per token). Different models charge different rates; expensive models cost more per token but may deliver superior quality, reducing the number of revisions required.

Cost Optimization Strategy: Calculate total cost including input and output tokens. Many teams waste money using expensive models for simple tasks solvable by budget alternatives. Conversely, attempting complex reasoning with cheap models often requires multiple expensive revisions.

Real-World Cost Scenarios

Scenario 1: Processing 1 Million Queries Annually

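Assuming an average of 500 input tokens and 800 output tokens per query (500 million input tokens and 800 million output tokens per year):

- GPT-5: $8,625 annually ($625 input + $8,000 output)

- Claude Opus 4: $67,500 annually at $15/$75 per million tokens ($7,500 input + $60,000 output)

- Gemini 2.5 Flash: $555 annually ($75 input + $480 output)

- DeepSeek: about $48 annually ($14 input + $33.60 output)

- Self-hosted Llama 3.2: roughly $3,000 in hardware with $0 in per-token fees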
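The arithmetic behind a scenario like this is easy to reproduce. The sketch below uses the per-million-token list prices quoted earlier for GPT-5, Gemini 2.5 Flash, and DeepSeek; the function name and structure are illustrative, and `Decimal` is used only to avoid floating-point rounding in currency totals.

```python
from decimal import Decimal

# USD per million tokens (input, output), as quoted in the pricing sections.
PRICES = {
    "GPT-5": (Decimal("1.25"), Decimal("10.00")),
    "Gemini 2.5 Flash": (Decimal("0.15"), Decimal("0.60")),
    "DeepSeek": (Decimal("0.028"), Decimal("0.042")),
}

MILLION = Decimal(1_000_000)


def annual_cost(queries, input_tokens, output_tokens, in_rate, out_rate):
    """Total USD for `queries` requests of the given average size."""
    input_cost = queries * input_tokens / MILLION * in_rate
    output_cost = queries * output_tokens / MILLION * out_rate
    return input_cost + output_cost


for model, (in_rate, out_rate) in PRICES.items():
    total = annual_cost(1_000_000, 500, 800, in_rate, out_rate)
    print(f"{model}: ${total:,.2f}")
```

Note that output tokens dominate the total for most models, so response length is usually the first thing to tune when costs matter.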

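Scenario 2: Complex Reasoning Tasks (2,000 input, 1,500 output tokens), Cost per 1,000 Queries

- GPT-5 with reasoning: about $17.50 at list prices, rising with any hidden reasoning tokens, which are billed as output

- Claude Opus 4: about $142.50 at $15/$75 per million tokens

- DeepSeek R1: about $0.12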

AI Comparison by Specialized Domains

Best AI for Scientific Research & Academic Tasks

Gemini 2.5 Pro dominates scientific applications through its 2-million-token context window enabling entire research paper analysis within single interactions. Claude Opus 4’s exceptional reasoning capability and minimal hallucination rate make it ideal for peer-reviewed publication assistance. GPT-5 provides balanced scientific capability at lower cost than Claude.

Best AI for Programming & Software Engineering

Claude Opus 4 scores 93% on real-world coding benchmarks, producing production-ready code with strong architecture and security practices. GPT-5 ranks close behind with comparable capability at lower cost. For budget-constrained teams, Gemini 2.5 Flash delivers about 85% of premium performance at 2-5% of the cost.

Best AI for Customer Service & Support

Fine-tuned Claude or GPT variants excel in customer-facing applications through consistent tone, extensive context retention, and instruction-following precision. Real-time interaction demands low-latency models such as Gemini 2.5 Flash or Claude Haiku.

Best AI for Creative Content & Marketing

GPT-5 dominates creative tasks through superior output variety, emotional intelligence, and stylistic flexibility. Grok-3 excels in humor-infused content and real-time cultural relevance. Claude provides alternative excellence in sophisticated, literary-quality writing.

Best AI for Legal Document Analysis

Claude Opus 4’s conservative accuracy and legal knowledge, combined with the 200K token context window, enable comprehensive contract and regulation analysis. Gemini 2.5 Pro’s extended context window handles document-heavy analysis. Both models incorporate appropriate caution about legal limitations.

Emerging Trends in AI Comparison for 2025

AI Agents Benchmarking Revolution

2025 has witnessed the emergence of AI agents—autonomous systems capable of planning, using tools, and executing multi-step workflows. Benchmarking has evolved from single-turn Q&A evaluation to comprehensive agent evaluation across real-world scenarios. Leading benchmarks like WebArena test agents’ ability to complete complex tasks across the internet.

Speed and Efficiency Outpacing Capability

Contrary to previous scaling assumptions, 2025 data suggests that speed and efficiency increasingly matter as much as raw capability. Smaller, highly optimized models like TinyLlama (1.1B parameters) operate on smartphones and edge devices while delivering surprising performance. This democratization enables deployment in privacy-sensitive and resource-constrained environments.

Multimodal Convergence

The industry shift toward native multimodality—simultaneous text, image, video, and audio processing—continues accelerating. Gemini’s 2-million-token window combined with video understanding represents the frontier, reshaping expectations for model versatility.

Real-Time Integration Demands

Models with integrated web search (Gemini, Perplexity, Grok) address critical hallucination problems by grounding responses in current information. This capability becomes increasingly essential as users demand current event knowledge and real-time information within AI responses.

Specialized Model Proliferation

Rather than single general-purpose models, organizations increasingly deploy specialized models for coding (CodeLlama variants), creativity, scientific reasoning, and domain-specific tasks. Mix-and-match strategies optimize cost, performance, and specialization simultaneously.

How to Choose the Right AI Model for Your Needs

Step 1: Define Your Primary Use Case

Clearly specify whether you require general-purpose capability, domain expertise (coding, research, creative), real-time information, or specialized features (video understanding, audio processing). This single decision determines half your evaluation criteria.

Step 2: Establish Performance Thresholds

Determine minimum acceptable accuracy, latency, and throughput requirements. Different applications demand different specifications. Customer service systems require <500ms latency while batch research processing tolerates seconds of delay.

Step 3: Calculate Total Cost of Operation

Beyond per-token pricing, include infrastructure costs, employee training, integration complexity, and support expenses. For high-volume operations, conduct break-even analysis between commercial services and self-hosted open-source models.
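A break-even analysis compares a fixed up-front cost against the per-query saving from leaving the API. The sketch below is a simplified model: the $3,000 hardware figure, the $0.001 per-query running cost, and the function names are assumptions for illustration, and it ignores staffing, depreciation, and differences in model quality.

```python
def api_cost_per_query(input_tokens, output_tokens, in_rate, out_rate):
    """USD for one request; rates are USD per million tokens."""
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


def break_even_queries(fixed_cost, self_hosted_cost_per_query, api_cost):
    """Queries needed before self-hosting becomes cheaper than the API.

    Returns None when the API is never more expensive per query.
    """
    saving = api_cost - self_hosted_cost_per_query
    if saving <= 0:
        return None
    return fixed_cost / saving


# Hypothetical inputs: $3,000 of hardware, $0.001 per query for power and upkeep,
# compared with an API billed at $1.25 / $10.00 per million tokens.
per_query = api_cost_per_query(500, 800, 1.25, 10.00)
print(per_query)
print(break_even_queries(3_000, 0.001, per_query))
```

If the function returns `None`, the API is cheaper at any volume under the stated assumptions, which is often the case when comparing against low-cost hosted models.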

Step 4: Evaluate Vendor Stability & Support

For business-critical applications, vendor reputation, SLA guarantees, and support responsiveness matter significantly. Mature providers like OpenAI, Google, and Anthropic offer enterprise contracts with uptime guarantees. Early-stage providers may offer better pricing but carry higher risk.

Step 5: Test Before Full Deployment

Never commit to exclusive vendor relationships without pilot testing. Most providers offer free trials or credits. Run identical test queries across competing models, measuring quality, cost, and speed. Real-world performance often differs from benchmark scores.
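A pilot test of this kind can be a small script. The sketch below sends the same prompts to each candidate and averages a quality score and latency. `model_a`, `model_b`, and `exact_match` are hypothetical stand-ins; in a real pilot they would be replaced with actual API client calls and a scoring method suited to the task.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    model: str
    mean_score: float
    mean_latency_s: float


def run_pilot(models: dict[str, Callable[[str], str]],
              cases: list[tuple[str, str]],
              score: Callable[[str, str], float]) -> list[Result]:
    """Send every prompt to every model; return results sorted by mean score."""
    results = []
    for name, ask in models.items():
        scores, latencies = [], []
        for prompt, expected in cases:
            start = time.perf_counter()
            answer = ask(prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(score(answer, expected))
        results.append(Result(name, sum(scores) / len(scores),
                              sum(latencies) / len(latencies)))
    return sorted(results, key=lambda r: r.mean_score, reverse=True)


# Hypothetical stand-ins; replace with real API client calls.
def model_a(prompt: str) -> str:
    return "4" if "2 + 2" in prompt else "unknown"


def model_b(prompt: str) -> str:
    return "Paris" if "France" in prompt else "4"


def exact_match(answer: str, expected: str) -> float:
    return 1.0 if answer.strip().lower() == expected.strip().lower() else 0.0


cases = [("What is 2 + 2?", "4"), ("Capital of France?", "Paris")]
ranking = run_pilot({"model-a": model_a, "model-b": model_b}, cases, exact_match)
for r in ranking:
    print(r.model, r.mean_score, f"{r.mean_latency_s:.6f}s")
```

Using prompts drawn from real workloads, rather than generic test questions, is what makes the comparison meaningful for a specific application.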

Step 6: Monitor and Optimize Continuously

AI model landscapes evolve rapidly. Quarterly reassessment of model performance, pricing changes, and new releases ensures continued optimization. Yesterday’s optimal choice may become suboptimal as new models emerge and pricing shifts.

| Recommendation | ✓ Advantage | ✗ Trade-off |
|---|---|---|
| GPT-5 + Claude Opus 4 hybrid approach | Maximum reliability and support | Higher costs than alternatives |
| Gemini 2.5 Flash or DeepSeek | Excellent cost efficiency | Slightly lower performance than premium |
| Gemini 2.5 Pro + Claude | Extended context for document analysis | Specialized use cases may need alternatives |
| Self-hosted Llama 3.2 | Complete data sovereignty | Infrastructure management required |

Common AI Comparison Mistakes to Avoid

Mistake 1: Selecting Based Solely on Benchmarks

Benchmark scores represent controlled laboratory conditions rarely matching real-world applications. A model scoring 2% higher on academic benchmarks may provide inferior results for your specific use case. Real-world testing trumps abstract metrics.

Mistake 2: Ignoring Total Cost of Ownership

Per-token pricing tells an incomplete cost story. Infrastructure, integration, training, and support expenses often exceed API costs. Calculate comprehensively before selecting models based on rate cards.

Mistake 3: Treating Models as Interchangeable

Claude consistently produces different outputs than GPT-5 despite similar benchmark scores. Models exhibit distinct personality traits, safety characteristics, and reasoning patterns. Testing with actual queries reveals differences invisible in benchmarks.

Mistake 4: Overlooking Emerging Alternatives

The AI landscape evolves rapidly. Evaluating only established providers ignores emerging options offering superior cost-efficiency or specialized capabilities. Quarterly market reviews identify new opportunities.

Mistake 5: Ignoring Infrastructure & Integration Complexity

Even technically superior models provide little value if integration complexity exceeds organizational capacity. Evaluate implementation requirements alongside performance metrics.

The Future of AI Comparison: What to Expect Beyond 2025

Specialized Model Ecosystem Maturation

Rather than competing on single general-purpose model capability, providers will increasingly offer specialized variants for coding, scientific reasoning, creative tasks, and domain-specific applications. Organizations will adopt portfolio strategies deploying different models for different functions.

Standardized Evaluation Frameworks

Industry convergence around standardized benchmarks and evaluation methodologies will enable more transparent, reproducible comparisons. Current evaluation fragmentation will gradually consolidate around accepted standards.

Cost Compression Acceleration

Historical trends suggest continued cost reduction through efficiency improvements and competitive pressure. Expensive capabilities today become commoditized tomorrow. Organizations should design architectures assuming dramatic future price decreases.

Multimodal Sophistication Expansion

Native multimodality will expand beyond text, images, and video to include real-time audio streams, sensor data, and specialized formats. This evolution enables new applications currently impossible with text-only systems.

Real-Time Knowledge Integration Standard

Grounding AI responses in real-time information will transition from specialized feature to industry standard. Hallucinations stemming from knowledge gaps become increasingly unacceptable as benchmarking standards evolve.

Conclusion: Making Informed AI Model Decisions in 2025

The artificial intelligence landscape in 2025 presents unprecedented opportunities alongside genuine complexity. Rather than a single “best” model, the optimal choice depends on specific requirements, budget constraints, technical capabilities, and use-case demands.

Key Takeaways: OpenAI’s GPT-5 maintains market leadership through unmatched versatility and user adoption. Claude Opus 4 delivers premium accuracy and reasoning—especially valuable for complex professional tasks. Gemini 2.5 Pro’s extended context window and multimodal capabilities excel for research and document analysis. DeepSeek and open-source Llama 3.2 provide exceptional value for cost-conscious organizations. Grok offers unique real-time integration capabilities.

Actionable Recommendation: Begin with pilot testing using free tier or trial credits. Run identical queries across 2-3 competing models, measuring quality, latency, and cost for your specific application. This empirical approach reveals performance differences benchmarks miss. For ongoing optimization, reassess quarterly as new models emerge and pricing evolves.

The rapid evolution of AI capabilities creates both opportunities and risks. Organizations embracing systematic model evaluation, continuous monitoring, and portfolio diversification position themselves to capture competitive advantages while managing technological disruption. The future belongs not to those choosing a single “best” model, but to those developing organizational capabilities for continuous model assessment and strategic deployment.

Final Thought: In an era where AI capabilities evolve faster than any individual can track, systematic comparison methodologies and regular reassessment become essential business practices. The organizations that thrive will be those that treat AI model selection not as a one-time decision but as continuous strategic optimization aligned with business objectives, technical capabilities, and financial constraints.